Problem Statement

Gesture recognition, i.e., the recognition of predefined gestures from arm or hand movements, enables a natural extension of the way we currently interact with devices. As the number of human-machine interactions grows, alternative user interfaces will become more important. Commercially available gesture recognition systems are usually pre-trained: the developers specify a set of gestures, and the user is provided with an algorithm that can recognize only these gestures. To improve the user experience, it is often desirable to let users define their own gestures. In that case, the user needs to train the recognition system herself by performing a set of example gestures. Crucially, this scenario requires learning gestures from just a few training examples to avoid overburdening the user.

The first step towards an in-situ trainable gesture recognition system is to create an in-situ trainable gesture classifier.

Methods and Solution Proposal

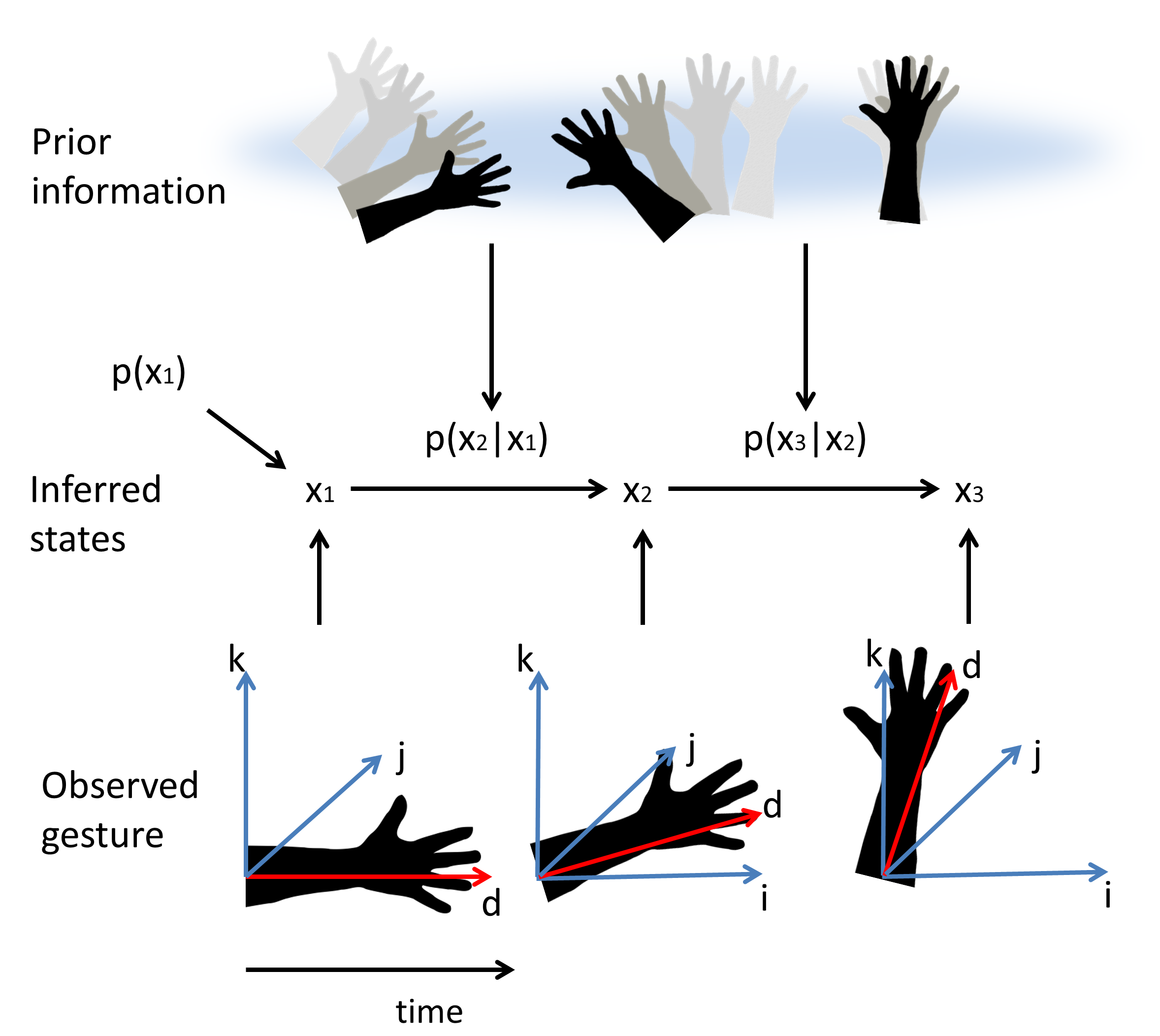

Our proposed gesture classifier is based on Bayesian hidden Markov models. The general idea behind using hidden Markov models is that the measured direction of the arm can be mapped onto discrete states. Instead of storing the sequence of discrete states, the algorithm learns the so-called transition probabilities between the states. A transition probability can be viewed as the probability that the arm will move from one direction to another; in other words, it corresponds to the probability of a particular movement. These transition probabilities differ between the distinguishable types (classes) of gestures. Therefore, once the algorithm has learned the transition probabilities for all gesture classes, it can evaluate the probability that a new measurement was generated by a specific class, which is the basis for classifying it. This concept has been applied successfully to gesture recognition before.

Our contribution is to use Bayesian hidden Markov models as the basis of a hierarchical model. This allows us to incorporate prior information about gestures in the form of a prior probability distribution over the transition probabilities. The transition probabilities for a specific gesture class are then learned by combining this prior information with the measurements (see Figure 1). When constructed properly, the prior distribution may capture general properties of gestures, which could help the classifier learn new gestures from fewer training examples.
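The idea of combining a prior over transition probabilities with measured transitions can be illustrated with a minimal Python (NumPy) sketch. It makes several assumptions not taken from the source: arm directions are quantized by assigning each direction vector to the closest entry of a hypothetical codebook of reference directions; the model is simplified to a visible Markov chain over these quantized states (a full Bayesian hidden Markov model would also have hidden states with emission distributions and would need approximate inference); and the prior is a fixed Dirichlet pseudo-count matrix `prior_alpha` rather than the learned hierarchical prior described above. Because the Dirichlet distribution is conjugate to each row of the transition matrix, combining prior and measurements reduces to adding observed transition counts to the pseudo-counts.

```python
import numpy as np


def quantize_directions(directions, codebook):
    """Map each measured direction vector to the index of the closest codebook
    direction (largest dot product; with unit-norm codebook rows this is the
    largest cosine similarity). The codebook is a hypothetical design choice."""
    directions = np.asarray(directions, dtype=float)
    codebook = np.asarray(codebook, dtype=float)
    return np.argmax(directions @ codebook.T, axis=1)


def transition_counts(states, n_states):
    """Count observed transitions i -> j in one state sequence."""
    counts = np.zeros((n_states, n_states))
    for a, b in zip(states[:-1], states[1:]):
        counts[a, b] += 1
    return counts


def posterior_transition_matrix(sequences, prior_alpha):
    """Posterior mean transition matrix under independent Dirichlet priors on
    each row; prior_alpha[i, j] acts as a pseudo-count for transition i -> j."""
    prior_alpha = np.asarray(prior_alpha, dtype=float)
    n_states = prior_alpha.shape[0]
    alpha_post = prior_alpha.copy()
    for states in sequences:
        # Conjugacy: the posterior is Dirichlet(prior pseudo-counts + observed counts).
        alpha_post += transition_counts(states, n_states)
    return alpha_post / alpha_post.sum(axis=1, keepdims=True)


def log_likelihood(states, transition_matrix):
    """Log-probability of the observed transitions under one class model
    (the initial-state probability is ignored for simplicity)."""
    states = np.asarray(states)
    return float(np.sum(np.log(transition_matrix[states[:-1], states[1:]])))


def classify(states, models):
    """Return the class label whose transition matrix gives the highest
    log-likelihood for the state sequence."""
    return max(models, key=lambda label: log_likelihood(states, models[label]))


# Toy example: two gesture classes over 4 quantized directions
# (e.g. east, north, west, south), one training sequence each.
prior = np.ones((4, 4))  # uniform Dirichlet prior, i.e. uninformative
models = {
    "circle": posterior_transition_matrix([[0, 1, 2, 3, 0, 1, 2, 3]], prior),
    "back_and_forth": posterior_transition_matrix([[0, 2, 0, 2, 0, 2, 0, 2]], prior),
}
print(classify([1, 2, 3, 0, 1], models))  # circle
```

Classification here uses the posterior mean transition matrix of each class, a simple plug-in choice; a fully Bayesian classifier could instead integrate over the Dirichlet posterior. An informative prior would place larger pseudo-counts on transitions that are common across many gestures, so that a class trained on a single example still assigns sensible probabilities to transitions it has not yet observed.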

Results

We collected a gesture database using a Myo sensor bracelet (see Figure 2). The database contains 17 different gesture classes, each performed 20 times by the same user. The duration of the measurements was fixed to 3 seconds.

The gesture database was split into a training set containing 5 samples of every gesture class and a test set containing the remaining (15 × 17 =) 255 samples.
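The per-class split can be written as a short helper. It assumes that the recordings are stored in a dict keyed by class label and that the first 5 recordings of each class form the training set; the source does not state how the training samples were selected. With 17 classes of 20 recordings each, this yields 17 × 5 = 85 training and 17 × 15 = 255 test recordings.

```python
def split_per_class(recordings_by_class, n_train=5):
    """Split {label: [recording, ...]} into lists of (label, recording) pairs.

    Assumption: the first n_train recordings of each class are used for training.
    """
    train, test = [], []
    for label, recordings in recordings_by_class.items():
        train.extend((label, r) for r in recordings[:n_train])
        test.extend((label, r) for r in recordings[n_train:])
    return train, test


# Placeholder data: 17 classes with 20 recordings each (integers stand in for recordings).
database = {f"gesture_{c}": list(range(20)) for c in range(17)}
train, test = split_per_class(database, n_train=5)
print(len(train), len(test))  # 85 255
```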

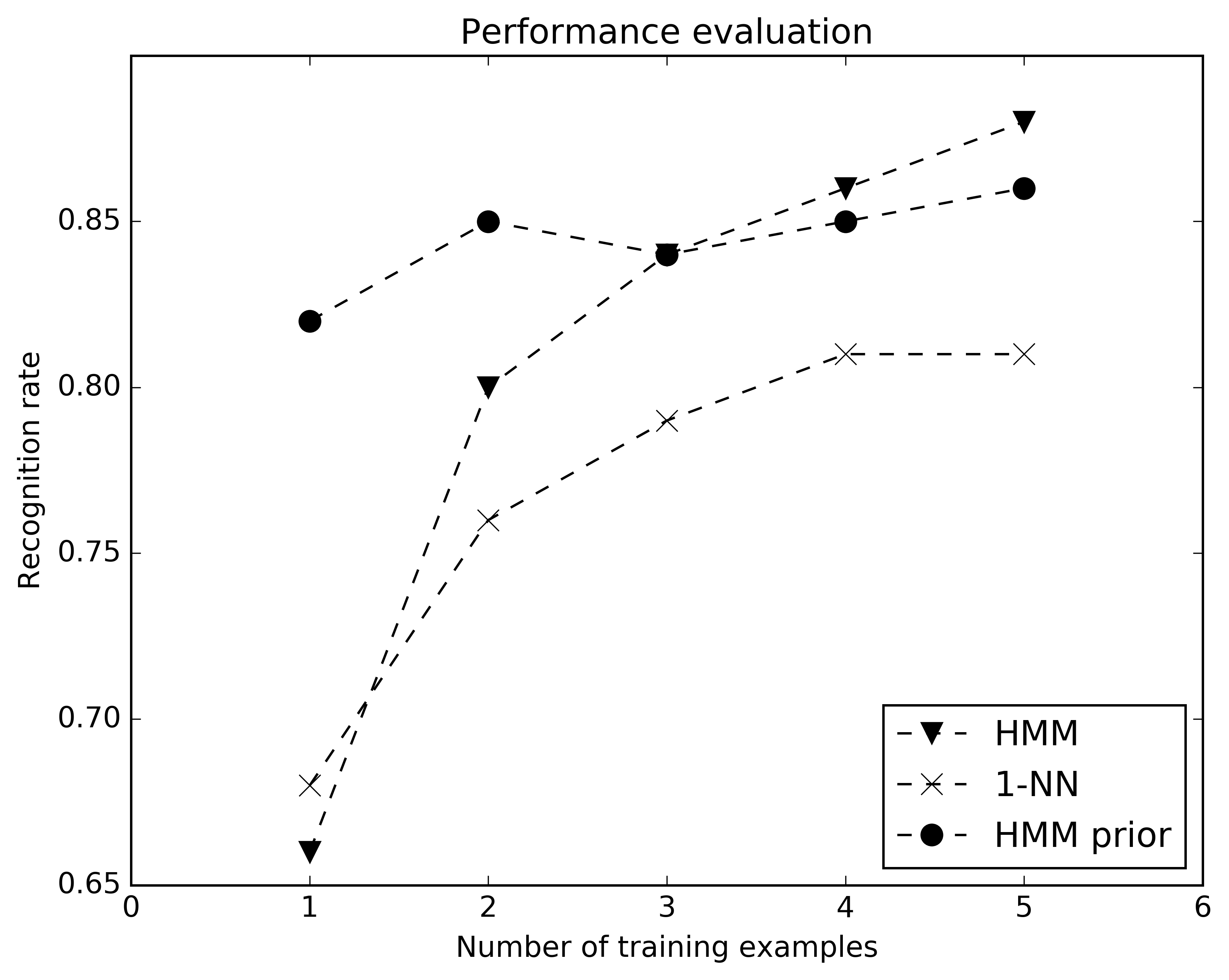

As a performance measure, we used the recognition rate, i.e., the number of correctly classified test recordings divided by the total number of test recordings. To assess the influence of the constructed prior distribution, we also evaluated the algorithm with an uninformative prior distribution. As a baseline, we used a 1-nearest-neighbor (1-NN) classifier.
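The recognition rate and the 1-NN baseline can be sketched as follows. The source does not state which distance the 1-NN classifier uses; this sketch assumes the Euclidean distance between recordings flattened into vectors, which requires recordings of equal length (plausible here, since the measurement duration was fixed, but still an assumption).

```python
import numpy as np


def recognition_rate(predicted, actual):
    """Fraction of recordings whose predicted label equals the true label."""
    predicted, actual = np.asarray(predicted), np.asarray(actual)
    return float(np.mean(predicted == actual))


def one_nn_predict(train_x, train_y, test_x):
    """1-nearest-neighbor baseline: each test recording gets the label of the
    closest training recording (Euclidean distance on flattened recordings)."""
    train_x = np.asarray(train_x, dtype=float).reshape(len(train_x), -1)
    test_x = np.asarray(test_x, dtype=float).reshape(len(test_x), -1)
    # Squared distances between every test and training recording: (n_test, n_train).
    dists = ((test_x[:, None, :] - train_x[None, :, :]) ** 2).sum(axis=2)
    return [train_y[i] for i in dists.argmin(axis=1)]


# Toy example with 2-dimensional "recordings".
pred = one_nn_predict([[0, 0], [10, 10]], ["a", "b"], [[1, 1], [9, 8], [2, 0]])
print(pred, recognition_rate(pred, ["a", "b", "b"]))  # ['a', 'b', 'a'] and a rate of 2/3
```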

Figure 3 shows the recognition rates of all algorithms. Both hidden Markov model-based algorithms achieve higher recognition rates than the 1-NN classifier. Given our interest in in-situ training of the gesture classifier, we are especially interested in classifier performance as a function of the number of training examples. In particular, for a single training example (one-shot training), the hidden Markov model with the learned prior distribution clearly outperforms both alternative models.
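Measuring performance as a function of the number of training examples can be sketched as a loop that retrains a classifier with k = 1, ..., 5 examples per class and evaluates the recognition rate on a fixed test set. The callable `fit_predict(train_x, train_y, test_x)` is a hypothetical interface into which any of the compared classifiers could be wrapped. Keeping the test set fixed across k and taking the first k of the five training recordings are assumptions; the source does not describe these details.

```python
def learning_curve(recordings_by_class, fit_predict, max_train=5):
    """Recognition rate on a fixed test set as a function of the number k of
    training examples per class (k = 1 corresponds to one-shot training)."""
    # Fixed test set: every recording after the first max_train of each class.
    test = [(label, r) for label, recs in recordings_by_class.items() for r in recs[max_train:]]
    test_x = [r for _, r in test]
    test_y = [label for label, _ in test]
    rates = {}
    for k in range(1, max_train + 1):
        # k-shot training set: the first k recordings of each class.
        train = [(label, r) for label, recs in recordings_by_class.items() for r in recs[:k]]
        predicted = fit_predict([r for _, r in train], [label for label, _ in train], test_x)
        correct = sum(p == y for p, y in zip(predicted, test_y))
        rates[k] = correct / len(test_y)
    return rates


def nearest_scalar(train_x, train_y, test_x):
    """Toy stand-in classifier for scalar 'recordings': 1-NN on absolute difference."""
    return [train_y[min(range(len(train_x)), key=lambda i: abs(train_x[i] - q))] for q in test_x]


toy = {"a": [0, 1, 0, 1, 0, 1, 0], "b": [10, 11, 10, 11, 10, 11, 10]}
print(learning_curve(toy, nearest_scalar))
```

Evaluating every k against the same test set keeps the resulting curve comparable across training-set sizes, so differences between classifiers at k = 1 reflect one-shot performance rather than a change in test data.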