Note: This site is currently under construction.

The course “Bayesian Machine Learning and Information Processing” starts in November 2024 (Q2).

Course goals

This course provides an introduction to Bayesian machine learning and information processing systems. The Bayesian approach affords a unified and consistent treatment of many useful information processing systems.

Course summary

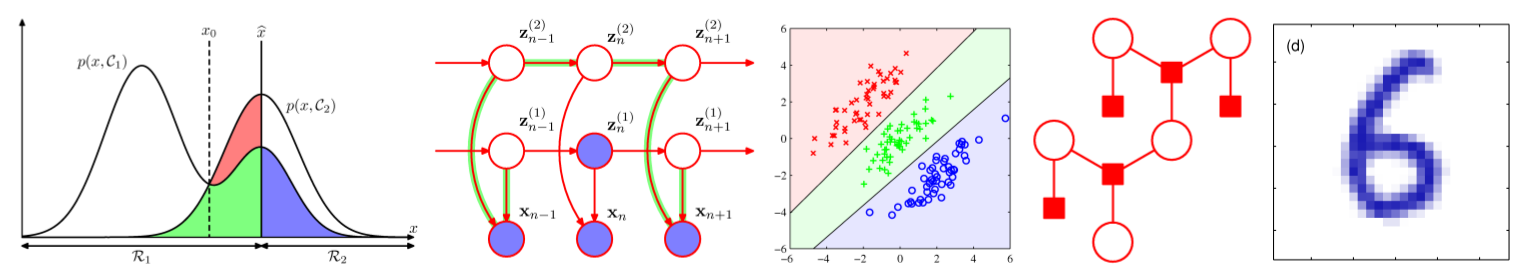

This course covers the fundamentals of a Bayesian (i.e., probabilistic) approach to machine learning and information processing systems. The Bayesian approach allows for a unified and consistent treatment of many model-based machine learning techniques. Initially, we focus on linear Gaussian systems and discuss many useful models and applications, including common regression and classification methods, Gaussian mixture models, hidden Markov models and Kalman filters. We will discuss important algorithms for parameter estimation in these models, including the Variational Bayes method. The Bayesian method also provides tools for comparing the performance of different information processing systems by estimating the Bayesian evidence for each model. We will discuss several methods for approximating Bayesian evidence. Next, we will discuss intelligent agents that learn purposeful behavior from interactions with their environment. These agents are used for applications such as self-driving cars or interactive design of virtual and augmented realities. In this course, we also relate synthetic Bayesian intelligent agents to natural intelligent agents such as the brain. You will be challenged to code Bayesian machine learning algorithms yourself and apply them to practical information processing problems.

News and Announcements

- (13-Nov-2024) Please sign up for Piazza (Q&A platform) via the signup link. We will also use the Piazza site for new announcements as much as possible.

Instructors

- Prof.dr.ir. Bert de Vries (email: bert.de.vries@tue.nl) is the responsible instructor for this course and teaches the lectures with label B.

- Dr. Wouter Kouw (w.m.kouw@tue.nl) teaches the probabilistic programming lectures with label W.

- Tim Nisslbeck, Sepideh Adamiat, Wouter Nuijten and Fons van der Plas are the teaching assistants.

Materials

In principle, you can download all needed materials from the links below.

Lecture Notes

The lecture notes are mandatory material for the exam:

- Bert de Vries (2023), PDF bundle of all lecture notes for lessons B0 through B12.

- Wouter Kouw (2023), PDF bundle of all probabilistic programming lecture notes for lessons W1 through W4.

Books

The following book is optional but very useful for additional reading:

Software

Please follow the software installation instructions. If you encounter any problems, please contact us in class or on Piazza.

Lecture notes, exercises, assignments and video recordings

You can access all lecture notes, assignments, videos and exercises online through the links below:

| Date | Lesson | Lecture notes | Exercises | Assignments | Video recordings |
|---|---|---|---|---|---|
| 13-Nov-2024 (Wednesday) | B0: Course Syllabus; B1: Machine Learning Overview | B0, B1 | | | B0, B1 |
| 15-Nov-2024 | B2: Probability Theory Review | B2 | B2-ex, B2-sol | | B2.1, B2.2 |
| 20-Nov-2024 | B3: Bayesian Machine Learning | B3 | B3-ex, B3-sol | | B3.1, B3.2 |
| 22-Nov-2024 | B4: Factor Graphs and the Sum-Product Algorithm | B4 | B4-ex, B4-sol | | B4.1, B4.2 |
| 27-Nov-2024 | Introduction to Julia | W0 | | | |
| 27-Nov-2024 | Pick-up Julia programming assignment A0 | | | A0 | |
| 29-Nov-2024 | B5: Continuous Data and the Gaussian Distribution | B5 | B5-ex, B5-sol | | B5.1, B5.2 |
| 04-Dec-2024 | B6: Discrete Data and the Multinomial Distribution | B6 | B6-ex, B6-sol | | B6 |
| 06-Dec-2024 | Probabilistic Programming 1 - Bayesian inference with conjugate models | W1 | | | W1.1, W1.2 |
| 06-Dec-2024 | Submission deadline assignment A0 | | | link | |
| 06-Dec-2024 | Pick-up probabilistic programming assignment A1 | | | A1 | |
| 11-Dec-2024 | B7: Regression | B7 | B7-ex, B7-sol | | B7.1, B7.2 |
| 13-Dec-2024 | B8: Generative Classification; B9: Discriminative Classification | B8, B9 | B8-9-ex, B8-9-sol | | B8, B9 |
| 18-Dec-2024 | Probabilistic Programming 2 - Bayesian regression & classification | W2 | | | W2.1, W2.2 |
| 20-Dec-2024 | B10: Latent Variable Models and Variational Bayes | B10 | B10-ex, B10-sol | | B10.1, B10.2 |
| 20-Dec-2024 | Submission deadline assignment A1 | | | link | |
| break | | | | | |
| 08-Jan-2025 | Probabilistic Programming 3 - Variational Bayesian inference | W3 | | | W3.1, W3.2 |
| 10-Jan-2025 | B11: Dynamic Models | B11 | B11-ex, B11-sol | | B11 |
| 10-Jan-2025 | Pick-up probabilistic programming assignment A2 | | | A2 | |
| 15-Jan-2025 | Probabilistic Programming 4 - Bayesian filters & smoothers | W4 | | | W4.1, W4.2 |
| 17-Jan-2025 | B12: Intelligent Agents and Active Inference | B12, slides | B12-ex, B12-sol | | B12.1, B12.2 |
| 24-Jan-2025 | Submission deadline assignment A2 | | | link | |
| 30-Jan-2025 | written examination (13:30-16:30) | | | | |
| 17-Apr-2025 | resit written examination (18:00-21:00) | | | | |

Exams & Assignments

Preparation

- Consult the Course Syllabus (lecture notes for 1st class) for advice on how to study the materials.

- In addition to the materials in the above table, we provide two representative practice written exams:

  - 3-Feb-2022: exam; answers; calculations

  - 2-Feb-2023: exam; answers; calculations

Programming Assignments

- Programming assignments can be downloaded and submitted through the links in the above table.

Grading

- The final grade is composed of the results of assignments A1 (10%), A2 (10%), and a final written exam (80%). The grade will be rounded to the nearest integer.
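
As an illustration of the weighting above, here is a minimal Python sketch of the final-grade calculation. The function name `final_grade` and the example scores are hypothetical. The sketch assumes that all three results are on the same scale and that ties (x.5) round up; the rule above does not say how ties are handled.

```python
import math

# Weights as stated in the grading rule above.
WEIGHTS = {"A1": 0.10, "A2": 0.10, "exam": 0.80}


def final_grade(a1: float, a2: float, exam: float) -> int:
    """Weighted final grade, rounded to the nearest integer.

    Assumption: ties (x.5) round up; the course page does not
    specify how ties are handled.
    """
    weighted = WEIGHTS["A1"] * a1 + WEIGHTS["A2"] * a2 + WEIGHTS["exam"] * exam
    # Round away tiny floating-point noise before applying round-half-up.
    return math.floor(round(weighted, 9) + 0.5)


# Hypothetical scores: 0.1 * 8 + 0.1 * 7 + 0.8 * 6 = 6.3, which rounds to 6.
print(final_grade(a1=8.0, a2=7.0, exam=6.0))
```

Because the written exam carries 80% of the weight, the two assignments together move the unrounded grade by at most one fifth of their combined score.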