The 2020/21 course “Bayesian Machine Learning and Information Processing” will start in November 2020 (Q2).

Course summary

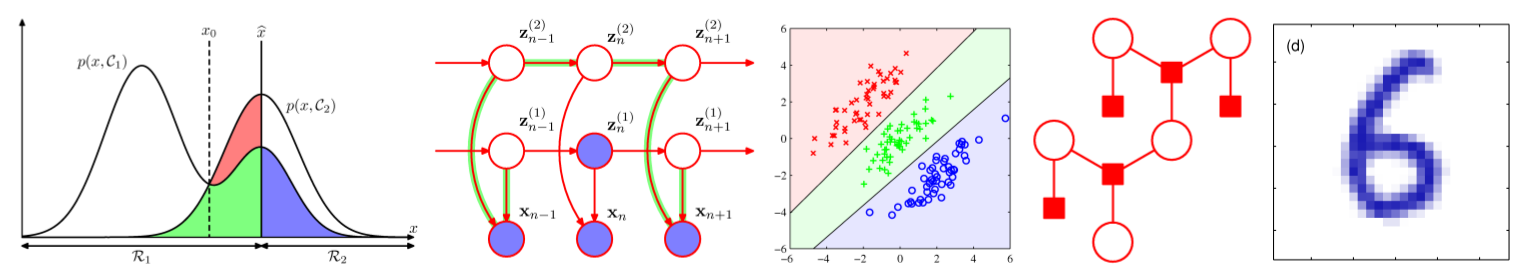

This course covers the fundamentals of a Bayesian (i.e., probabilistic) approach to machine learning and information processing systems. The Bayesian approach allows for a unified and consistent treatment of many model-based machine learning techniques. We focus on linear Gaussian systems and will discuss many useful models and applications, including common regression and classification methods, Gaussian mixture models, hidden Markov models and Kalman filters. We will discuss important algorithms for parameter estimation in these models, including the Expectation-Maximization (EM) algorithm and Variational Bayes (VB). The Bayesian method also provides tools for comparing the performance of different information processing systems by estimating the “Bayesian evidence” for each model. We will discuss several methods for approximating Bayesian evidence. Next, we will discuss intelligent agents that learn purposeful behavior from interactions with their environment. These agents are used in applications such as self-driving cars and the interactive design of virtual and augmented realities. We will also relate synthetic Bayesian intelligent agents to natural intelligent agents such as the brain. Throughout the course, you will be challenged to code Bayesian machine learning algorithms yourself and apply them to practical information processing problems.

Course goals

This course provides an introduction to Bayesian machine learning and information processing systems. The Bayesian approach affords a unified and consistent treatment of many useful information processing systems.

News and Announcements

- Wherever possible, we use the Piazza course site for announcements.

Instructors

- Prof.dr.ir. Bert de Vries (email: bert.de.vries@tue.nl) is the responsible instructor for this course and teaches all lectures labeled B.

- Dr. Wouter Kouw (email: w.m.kouw@tue.nl) teaches all practical sessions on probabilistic programming, labeled W.

- Ismail Senoz, MSc (i.senoz@tue.nl), and Magnus Koudahl, MSc (m.t.koudahl@tue.nl) are teaching assistants. Mr. Koudahl presents the “What is Life?” bonus lecture.

Materials

In principle, you can download all needed materials from the links below.

Books

Please download the following books/resources:

- Christopher M. Bishop (2006), Pattern Recognition and Machine Learning. You can also buy a hardcopy, e.g. at bol.com.

- Ariel Caticha (2012), Entropic Inference and the Foundations of Physics.

- Bert de Vries et al. (2020), PDF bundle of lecture notes for lessons B0 through B12 (Ed. Q3-2019/20).

  - The lecture notes may change slightly during the course, e.g., to incorporate comments from students. A final PDF version will be posted after the last lecture.

- Wouter Kouw (2020), Julia and Jupyter Install Guide.

  - Use this guide if you need help installing Julia and Jupyter, so that you can open and run the course notebooks on your own machine.

  - You can test your installation by running the notebook “Probabilistic-Programming-0.ipynb”, which can be downloaded from GitHub (under lessons/notebooks/probprog). Here is a video with step-by-step instructions on opening the course notebooks.

Lecture notes and videos

The source files for the lecture notes are available on GitHub. To download them, click the green Code button and then Download ZIP. The theory lectures are under lessons/notebooks and the programming notebooks are under lessons/notebooks/probprog. You don't have to download them, though; you can also view all lecture notes online through the links below:

| Date | Lesson | Video guides | Lecture notes | Live class recordings |
|---|---|---|---|---|
| 11-Nov-2020 | B0: Course Syllabus and Administrative Issues; B1: Machine Learning Overview | B1 | B0, B1 | B0 |
| 13-Nov-2020 | B2: Probability Theory Review | B2.1, B2.2 | B2 | B2 |
| 18-Nov-2020 | B3: Bayesian Machine Learning | B3.1, B3.2 | B3 | B3 |
| 20-Nov-2020 | W1: Probabilistic Programming 1 - Intro Bayesian ML | W1.1, W1.2, W1.3 | W1 | W1 |
| 25-Nov-2020 | B4: Factor Graphs and the Sum-Product Algorithm | B4 | B4 | B4 |
| 27-Nov-2020 | B5: Continuous Data and the Gaussian Distribution | B5.1, B5.2, B5.3 | B5 | B5 |
| 02-Dec-2020 | B6: Discrete Data and the Multinomial Distribution | B6 | B6 | B6, review B1-B6 |
| 04-Dec-2020 | W2: ProbProg 2 - MP & Analytical Bayesian Solutions | W2.1, W2.2, W2.3 | W2 | W2 |
| 09-Dec-2020 | B7: Regression | B7 | B7 | B7 |
| 11-Dec-2020 | B8: Generative Classification; B9: Discriminative Classification | B8, B9 | B8, B9 | B8-9 |
| 16-Dec-2020 | W3: ProbProg 3 - Regression and Classification | W3.1, W3.2 | W3 | W3 |
| 18-Dec-2020 | B10: Latent Variable Models and Variational Bayes | B10 | B10 | B10 |
| break | | | | |
| 06-Jan-2021 | B11: Dynamic Models | B11 | B11 | B11 |
| 08-Jan-2021 | B12: Intelligent Agents and Active Inference | B12 | B12 | B12 |
| 13-Jan-2021 | W4: ProbProg 4 - Latent Variable and Dynamic Models | W4.1, W4.2, W4.3 | W4 | PP4 |
| 15-Jan-2021 | M1: Bonus Lecture: What is Life? | M1 | M1 | |

Q&A

Q&A for each lesson can be accessed at the Piazza course site.

Exercises

In preparation for the exam, we recommend that you work through the following exercises to test your understanding of the materials:

Please feel free to consult the following matrix and Gaussian cheat sheets (by Sam Roweis) when doing the exercises.

Exam Guide

Each year there are two exam opportunities. Check the official TU/e course site for exam schedules. In the Q2-2020 course, your performance will be assessed by a WRITTEN EXAMINATION, which will most likely be offered both online (with proctoring software) and offline (on campus, if the situation allows).

You cannot bring notes or books to the exam. All required formulas are supplied on the exam sheet.